
Installing Kubernetes on Google Cloud Platform and Cluster Hardening

This is documentation of the lab "Kubernetes the hard way" by Kelsey Hightower. A massive thank you to Kelsey Hightower for putting this guide together. It is highly recommended to check the original lab, as it gives detailed explanations for every step of the process. In this documentation, explanations will be kept brief.

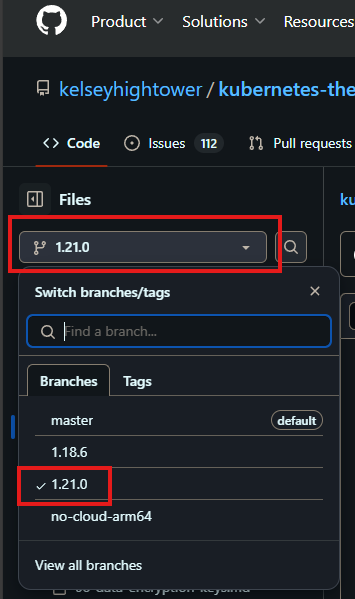

This documentation covers version 1.21.0 of the "Kubernetes the hard way" repository, as it is the most recent version that uses Google Cloud.

This documents my personal experience with "Kubernetes the hard way", including some added steps as solutions for parts of the original lab that didn't work for me.

Deploying the required instances on the Google Cloud Platform would normally incur costs. However, Google offers free trial credits on sign-up that we can use for this lab.

This lab was followed on a Kali Linux VM. For other operating systems, check the original lab's documentation.

Learning outcomes

- Learn how to create and manage instances on the Google Cloud Platform (GCP) with the gcloud command line tool

- Gain a deep understanding of the steps required to set up the Kubernetes components from scratch

- Learn how to create and manage Kubernetes clusters with the kubectl command

- Apply Kubernetes best practices

- Learn how to secure and monitor Kubernetes clusters

Quicklinks

- Prerequisites

- Installing the Client Tools

- Provisioning Compute Resources

- Provisioning a CA and Generating TLS Certificates

- Generating Kubernetes Configuration Files for Authentication

- Generating the Data Encryption Config and Key

- Bootstrapping the etcd Cluster

- Bootstrapping the Kubernetes Control Plane

- Bootstrapping the Kubernetes Worker Nodes

- Configuring kubectl for Remote Access

- Provisioning Pod Network Routes

- Deploying the DNS Cluster Add-on

- Smoke Test

After completing "Kubernetes the hard way", we can move on to labs on security best practices and cluster hardening. The following labs are freely available on the TryHackMe platform.

Creating an account on the Google Cloud Platform (GCP)

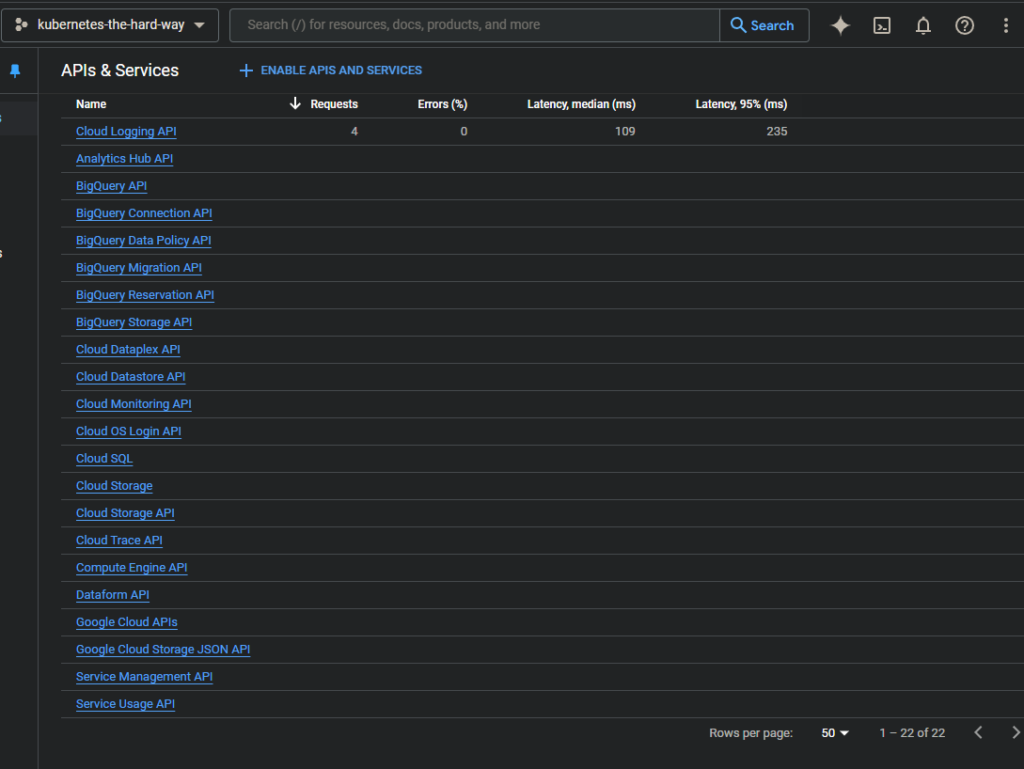

In order to create instances on the Google Cloud Platform, we first need to create an account. New accounts receive over £200 in free credits, which we can use to create our instances and follow this lab. The sign-up process should be straightforward. Once the sign-up is complete, while still on the GCP website, head over to "APIs and Services" and make sure the following APIs and Services are enabled.

Installing the Google Cloud SDK

The steps to install and configure the gcloud command line utility can be found in the official documentation.

# Verify the Google Cloud SDK version is 338.0.0 or higher

gcloud version

# Authorize gcloud to access the Cloud Platform with your Google user credentials

gcloud auth login

# List compute regions

gcloud compute regions list

# Set compute region

gcloud config set compute/region [region_name]

# List compute zones

gcloud compute zones list

# Set compute zone (Note: compute zone must be part of the selected compute region)

gcloud config set compute/zone [zone_name]
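The requirement that the zone belongs to the selected region can be checked before any resources are created. Below is a minimal sketch in bash, assuming the standard GCP naming in which a zone name is the region name plus a suffix (for example `europe-west2-a` in `europe-west2`); `zone_in_region` is a hypothetical helper, not part of gcloud or the original lab.

```shell
#!/usr/bin/env bash
# zone_in_region: hypothetical helper (not part of gcloud or the original lab).
# GCP zone names are "<region>-<suffix>", e.g. europe-west2-a, so removing the
# last "-<suffix>" part of the zone name must give back the region name.
zone_in_region() {
  local zone="$1" region="$2"
  [ "${zone%-*}" = "$region" ]
}
```

For example, `zone_in_region "$(gcloud config get-value compute/zone)" "$(gcloud config get-value compute/region)" && echo "zone OK"` should print `zone OK` when the two settings agree.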

Running Commands in Parallel with tmux

Because the same commands have to be run on several instances, we can use tmux to synchronize several shells, each connected to a different instance.

# Update the machine

sudo apt update && sudo apt upgrade -y && sudo apt autoremove

# Install tmux

sudo apt install tmux

Installing the Client tools

The command line utilities required for this lab are cfssl, cfssljson, and kubectl.

Installing CFSSL

The cfssl and cfssljson command line utilities will be used to provision a PKI infrastructure and generate TLS certificates.

# Download and install cfssl and cfssljson (Linux)

wget -q --show-progress --https-only --timestamping \

https://storage.googleapis.com/kubernetes-the-hard-way/cfssl/1.4.1/linux/cfssl \

https://storage.googleapis.com/kubernetes-the-hard-way/cfssl/1.4.1/linux/cfssljson

# Give executable permissions

chmod +x cfssl cfssljson

# Move them to /usr/local/bin/

sudo mv cfssl cfssljson /usr/local/bin/

Verification

# Verify cfssl and cfssljson are version 1.4.1 or higher

cfssl version

cfssljson --version

Installing kubectl

The kubectl command line utility will be used to interact with the Kubernetes API Server.

# Install kubectl from the official release binaries

wget https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubectl

chmod +x kubectl

sudo mv kubectl /usr/local/bin/

Verification

# Verify kubectl version is 1.21.0 or higher

kubectl version --client

In case the steps to install the kubectl tool did not work, installing it with the apt package manager should also work for this guide.

# Install kubectl with apt

sudo apt install kubectl
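The "or higher" version checks in this section (gcloud 338.0.0, cfssl 1.4.1, kubectl 1.21.0) can also be scripted. Below is a minimal sketch in bash, assuming GNU coreutils `sort -V` is available (it is on Kali and Ubuntu); `version_ge` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# version_ge: hypothetical helper; succeeds when version $1 >= version $2.
# Relies on GNU "sort -V" (version sort) from coreutils.
version_ge() {
  local have="$1" want="$2"
  # Strip a leading "v" (kubectl reports versions such as v1.21.0).
  have="${have#v}"
  want="${want#v}"
  # If the smaller of the two versions is the required one, then have >= want.
  [ "$(printf '%s\n%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}
```

For example, assuming cfssl prints a `Version: 1.4.1` line as shown in the original lab: `version_ge "$(cfssl version | awk '/^Version:/ {print $2}')" 1.4.1 && echo "cfssl OK"`.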

Provisioning Compute Resources on the Google Cloud Platform (GCP)

In Kelsey Hightower's original "Kubernetes the hard way" guide, three control plane nodes and three worker nodes are created. The Google Cloud free trial limits how many vCPUs can run at the same time (8 at the time of writing), and each e2-standard-2 instance uses 2 vCPUs, so we are limited to four instances. Therefore, we'll be creating a cluster of two control plane nodes and two worker nodes.

Creating a Virtual Private Cloud Network (VPC) on the Google Cloud Platform (GCP)

The Kubernetes networking model assumes a flat network in which nodes and pods can communicate with each other, so all instances in this lab are deployed on the same network and subnet. Before we can provision the compute instances, we first need to create a Virtual Private Cloud (VPC) network. You can find out more about cluster networking, subnets and network policies here.

# Create the "kubernetes-the-hard-way" custom VPC network

gcloud compute networks create kubernetes-the-hard-way --subnet-mode custom

# Create the "kubernetes-the-hard-way" subnet

gcloud compute networks subnets create kubernetes \

--network kubernetes-the-hard-way \

--range 10.240.0.0/24

Creating Firewall Rules

# Create a firewall rule that allows internal communication across all protocols

gcloud compute firewall-rules create kubernetes-the-hard-way-allow-internal \

--allow tcp,udp,icmp \

--network kubernetes-the-hard-way \

--source-ranges 10.240.0.0/24,10.200.0.0/16

# Create a firewall rule that allows external SSH, ICMP, and HTTPS

gcloud compute firewall-rules create kubernetes-the-hard-way-allow-external \

--allow tcp:22,tcp:6443,icmp \

--network kubernetes-the-hard-way \

--source-ranges 0.0.0.0/0

Verification

# List the newly created firewall rules

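gcloud compute firewall-rules list --filter="network:kubernetes-the-hard-way"

Kubernetes Public IP Address

# Allocate a static IP address that will be attached to the external load balancer fronting the Kubernetes API Servers

gcloud compute addresses create kubernetes-the-hard-way --region $(gcloud config get-value compute/region)

# Verify the kubernetes-the-hard-way static IP address was created in the default compute region

gcloud compute addresses list --filter="name=('kubernetes-the-hard-way')"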

Creating Compute Instances

# Create two controller nodes

for i in 0 1; do

gcloud compute instances create controller-${i} \

--async \

--boot-disk-size 200GB \

--can-ip-forward \

--image-family ubuntu-2004-lts \

--image-project ubuntu-os-cloud \

--machine-type e2-standard-2 \

--private-network-ip 10.240.0.1${i} \

--scopes compute-rw,storage-ro,service-management,service-control,logging-write,monitoring \

--subnet kubernetes \

--tags kubernetes-the-hard-way,controller

done

# Create two worker nodes

for i in 0 1; do

gcloud compute instances create worker-${i} \

--async \

--boot-disk-size 200GB \

--can-ip-forward \

--image-family ubuntu-2004-lts \

--image-project ubuntu-os-cloud \

--machine-type e2-standard-2 \

--metadata pod-cidr=10.200.${i}.0/24 \

--private-network-ip 10.240.0.2${i} \

--scopes compute-rw,storage-ro,service-management,service-control,logging-write,monitoring \

--subnet kubernetes \

--tags kubernetes-the-hard-way,worker

done
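The two loops above derive each instance's private IP from the loop index. Note that `10.240.0.1${i}` is string concatenation, not arithmetic, so controller-1 gets 10.240.0.11. The sketch below simply prints the resulting address plan, which is handy to keep at hand because these addresses are hard-coded later (for example in etcd's `--initial-cluster` and the API server's `--etcd-servers`). `node_plan` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# node_plan: prints the private IP (and pod CIDR for workers) that the two
# creation loops assign. "10.240.0.1${i}" is string concatenation, so i=1
# gives 10.240.0.11 rather than 10.240.0.2.
node_plan() {
  local i
  for i in 0 1; do
    printf 'controller-%s %s\n' "$i" "10.240.0.1${i}"
  done
  for i in 0 1; do
    printf 'worker-%s %s %s\n' "$i" "10.240.0.2${i}" "10.200.${i}.0/24"
  done
}

node_plan
```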

Verification

# List the compute instances

gcloud compute instances list --filter="tags.items=kubernetes-the-hard-way"

Configuring SSH Access

SSH will be used to connect to and configure the nodes of the cluster. When connecting to the compute instances for the first time, SSH keys will be generated and stored in the project or instance metadata. More information can be found in the Google Cloud documentation on connecting to instances.

# Test SSH access to controller-0 (enter a passphrase for the new SSH key when prompted)

gcloud compute ssh controller-0

# Exit the controller-0 instance

exit

Provisioning a Certificate Authority (CA) and Generating TLS Certificates

In this section we will provision a PKI infrastructure using Cloudflare's PKI toolkit, cfssl, then use it to bootstrap a Certificate Authority and generate TLS certificates for the Kubernetes components.

Certificate Authority (CA)

# Generate the CA configuration file, certificate, and private key

{

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": ["signing", "key encipherment", "server auth", "client auth"],

"expiry": "8760h"

}

}

}

}

EOF

cat > ca-csr.json <<EOF

{

"CN": "Kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "Kubernetes",

"OU": "CA",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

}

Client and Server Certificates

Admin Client Certificates

# Generate the admin client certificate and private key

{

cat > admin-csr.json << EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:masters",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare admin

}
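Every CSR in this section shares the same key settings and location fields; only CN, O and OU change. If you prefer less copy-pasting, a small bash function can write these files. This is a sketch, not part of the original lab; `write_csr` is a hypothetical helper, and its default OU matches the value used in the client certificates.

```shell
#!/usr/bin/env bash
# write_csr: hypothetical helper (not part of cfssl or the original lab).
# Writes a cfssl CSR file with the key and location fields used in this
# section; only CN, O and (optionally) OU change per component.
write_csr() {
  local file="$1" cn="$2" org="$3" ou="${4:-Kubernetes The Hard Way}"
  cat > "$file" <<EOF
{
  "CN": "${cn}",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "US", "L": "Portland", "O": "${org}", "OU": "${ou}", "ST": "Oregon" }
  ]
}
EOF
}
```

For example, `write_csr kube-proxy-csr.json system:kube-proxy system:node-proxier` produces a file equivalent to the kube-proxy CSR, and `write_csr ca-csr.json Kubernetes Kubernetes CA` one equivalent to the CA CSR; the `cfssl gencert` commands stay the same.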

Kubelet Client Certificates

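Kubernetes uses a special-purpose authorization mode called Node Authorizer that specifically authorizes API requests made by kubelets. To be authorized by the Node Authorizer, a kubelet must use a credential that identifies it as being in the system:nodes group, with a username of system:node:<nodeName>. This is why each certificate below sets O to system:nodes and CN to system:node:<instance>.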

# Generate a certificate and private key for each Kubernetes worker node

for instance in worker-0 worker-1; do

cat > ${instance}-csr.json <<EOF

{

"CN": "system:node:${instance}",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:nodes",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

EXTERNAL_IP=$(gcloud compute instances describe ${instance} \

--format 'value(networkInterfaces[0].accessConfigs[0].natIP)')

INTERNAL_IP=$(gcloud compute instances describe ${instance} \

--format 'value(networkInterfaces[0].networkIP)')

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-hostname=${instance},${EXTERNAL_IP},${INTERNAL_IP} \

-profile=kubernetes \

${instance}-csr.json | cfssljson -bare ${instance}

done
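Because the Node Authorizer relies on the O and CN fields, it is worth checking what actually ended up in each worker certificate. Below is a minimal sketch using standard openssl commands (assuming OpenSSL is installed, as it is on Kali); `show_cert_identity` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# show_cert_identity: hypothetical helper wrapping standard openssl commands.
# Prints the certificate subject (O and CN matter to the Node Authorizer)
# and the Subject Alternative Names (the -hostname values given to cfssl).
show_cert_identity() {
  local cert="$1"
  openssl x509 -in "$cert" -noout -subject
  openssl x509 -in "$cert" -noout -text | grep -A1 'Subject Alternative Name'
}
```

Running `show_cert_identity worker-0.pem` should include `system:nodes`, `system:node:worker-0` and the SANs worker-0 plus the instance's external and internal IPs; the exact subject formatting depends on the OpenSSL version.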

Controller Manager Client Certificate

# Generate the kube-controller-manager client certificate and private key

{

cat > kube-controller-manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:kube-controller-manager",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

}

The Kube Proxy Client Certificate

# Generate the kube-proxy client certificate and private key

{

cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:node-proxier",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-proxy-csr.json | cfssljson -bare kube-proxy

}

The Scheduler Client Certificate

# Generate the kube-scheduler client certificate and private key

{

cat > kube-scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "system:kube-scheduler",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

kube-scheduler-csr.json | cfssljson -bare kube-scheduler

}

The Kubernetes API Server Certificate

# Generate the Kubernetes API Server certificate and private key

{

KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \

--region $(gcloud config get-value compute/region) \

--format 'value(address)')

KUBERNETES_HOSTNAMES=kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.svc.cluster.local

cat > kubernetes-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "Kubernetes",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-hostname=10.32.0.1,10.240.0.10,10.240.0.11,10.240.0.12,${KUBERNETES_PUBLIC_ADDRESS},127.0.0.1,${KUBERNETES_HOSTNAMES} \

-profile=kubernetes \

kubernetes-csr.json | cfssljson -bare kubernetes

}
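The first address in the `-hostname` list, 10.32.0.1, is not a node IP: it is the first address of the service range (`--service-cluster-ip-range=10.32.0.0/24`, configured later on the API server), which Kubernetes assigns to the `kubernetes` Service in the default namespace. Pods reach the API server through that address, so it has to be in the certificate. A small bash sketch that derives it from a CIDR; `first_service_ip` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# first_service_ip: hypothetical helper. Prints the first address of an IPv4
# CIDR (network address + 1), e.g. 10.32.0.1 for 10.32.0.0/24.
first_service_ip() {
  local cidr="$1"
  local ip="${cidr%/*}" prefix="${cidr#*/}"
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  # Pack the four octets into one 32-bit integer.
  local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Clear the host bits to get the network address, then add one.
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  n=$(( (n & mask) + 1 ))
  printf '%d.%d.%d.%d\n' $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) \
    $(( (n >> 8) & 255 )) $(( n & 255 ))
}
```

If you change the service range, rerun `first_service_ip` on the new CIDR and update the `-hostname` list to match.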

Service Account Key Pair

The Kubernetes Controller Manager uses a key pair to generate and sign service account tokens.

# Generate the service-account certificate and private key

{

cat > service-account-csr.json <<EOF

{

"CN": "service-accounts",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "US",

"L": "Portland",

"O": "Kubernetes",

"OU": "Kubernetes The Hard Way",

"ST": "Oregon"

}

]

}

EOF

cfssl gencert \

-ca=ca.pem \

-ca-key=ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

service-account-csr.json | cfssljson -bare service-account

}
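Before distributing the files, you can check that every certificate was generated from the private key it is shipped with. Below is a minimal sketch that compares public keys using standard openssl commands; `cert_matches_key` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# cert_matches_key: hypothetical helper. Succeeds when the public key embedded
# in the certificate is the public half of the given private key.
cert_matches_key() {
  local cert="$1" key="$2"
  [ "$(openssl x509 -in "$cert" -noout -pubkey)" = "$(openssl pkey -in "$key" -pubout)" ]
}
```

For example: `for name in ca kubernetes service-account worker-0 worker-1; do cert_matches_key "${name}.pem" "${name}-key.pem" && echo "${name} OK"; done`.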

Distribute the Client & Server Certificates

# Copy the certificates and private key to each worker node

for instance in worker-0 worker-1; do

gcloud compute scp ca.pem ${instance}-key.pem ${instance}.pem ${instance}:~/

done

# Copy the certificates and private key to each controller node

for instance in controller-0 controller-1; do

gcloud compute scp ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \

service-account-key.pem service-account.pem ${instance}:~/

done

Generating Kubernetes Configuration Files for Authentication

Kubernetes Public IP Address

# Retrieve the kubernetes-the-hard-way static IP address

KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \

--region $(gcloud config get-value compute/region) \

--format 'value(address)')

The kubelet Kubernetes Configuration File

The following commands must be run in the same directory that was used to generate the TLS certificates in the previous section.

# Generate a kubeconfig for each worker node

for instance in worker-0 worker-1; do

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \

--kubeconfig=${instance}.kubeconfig

kubectl config set-credentials system:node:${instance} \

--client-certificate=${instance}.pem \

--client-key=${instance}-key.pem \

--embed-certs=true \

--kubeconfig=${instance}.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:node:${instance} \

--kubeconfig=${instance}.kubeconfig

kubectl config use-context default --kubeconfig=${instance}.kubeconfig

done
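Because `--embed-certs=true` is used, each kubeconfig carries its client certificate base64-encoded under `client-certificate-data`. Below is a minimal sketch for pulling it back out to confirm which identity a kubeconfig uses, assuming the layout `kubectl config` writes with one user entry per file; `kubeconfig_client_cert` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# kubeconfig_client_cert: hypothetical helper. Prints the first embedded
# client certificate of a kubeconfig, decoded from base64 back to PEM.
kubeconfig_client_cert() {
  local kubeconfig="$1"
  awk '/client-certificate-data:/ { print $2; exit }' "$kubeconfig" | base64 -d
}
```

For example, `kubeconfig_client_cert worker-0.kubeconfig | openssl x509 -noout -subject` should include `system:node:worker-0`.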

The kube-proxy Kubernetes Configuration File

# Generate a kubeconfig for the kube-proxy service

{

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials system:kube-proxy \

--client-certificate=kube-proxy.pem \

--client-key=kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

}

The kube-controller-manager Kubernetes Configuration File

# Generate a kubeconfig for the kube-controller-manager service

{

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=kube-controller-manager.pem \

--client-key=kube-controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-controller-manager \

--kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig

}

The kube-scheduler Kubernetes Configuration File

# Generate a kubeconfig for the kube-scheduler service

{

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \

--client-certificate=kube-scheduler.pem \

--client-key=kube-scheduler-key.pem \

--embed-certs=true \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=system:kube-scheduler \

--kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

}

The admin Kubernetes Configuration File

# Generate a kubeconfig for the admin user

{

kubectl config set-cluster kubernetes-the-hard-way \

--certificate-authority=ca.pem \

--embed-certs=true \

--server=https://127.0.0.1:6443 \

--kubeconfig=admin.kubeconfig

kubectl config set-credentials admin \

--client-certificate=admin.pem \

--client-key=admin-key.pem \

--embed-certs=true \

--kubeconfig=admin.kubeconfig

kubectl config set-context default \

--cluster=kubernetes-the-hard-way \

--user=admin \

--kubeconfig=admin.kubeconfig

kubectl config use-context default --kubeconfig=admin.kubeconfig

}

Distribute the Kubernetes Configuration Files

# Copy the kubelet and kube-proxy kubeconfig files to each worker node

for instance in worker-0 worker-1; do

gcloud compute scp ${instance}.kubeconfig kube-proxy.kubeconfig ${instance}:~/

done

# Copy the admin, kube-controller-manager and kube-scheduler kubeconfig files to each controller node

for instance in controller-0 controller-1; do

gcloud compute scp admin.kubeconfig kube-controller-manager.kubeconfig kube-scheduler.kubeconfig ${instance}:~/

done

Generating the Data Encryption Configuration and Key

Kubernetes supports the ability to encrypt cluster data at rest.

Encryption Key

# Generate an encryption key

ENCRYPTION_KEY=$(head -c 32 /dev/urandom | base64)

# Generate the encryption-config.yaml encryption config file

cat > encryption-config.yaml <<EOF

kind: EncryptionConfig
apiVersion: v1
resources:
  - resources:
      - secrets
    providers:
      - aescbc:
          keys:
            - name: key1
              secret: ${ENCRYPTION_KEY}
      - identity: {}

EOF
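The heredoc above expands `${ENCRYPTION_KEY}` when the file is written, so if the variable is unset the `secret:` field silently ends up empty and the API server is likely to fail to start with that config. Below is a minimal check in bash, assuming the 32-byte key generated with `head -c 32 /dev/urandom | base64` as in the original lab; `check_encryption_key` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# check_encryption_key: hypothetical helper. Succeeds only if the value is
# base64 that decodes to exactly 32 bytes.
check_encryption_key() {
  local key="$1" len
  len=$(printf '%s' "$key" | base64 -d 2>/dev/null | wc -c)
  [ "$len" -eq 32 ]
}
```

Before copying the file, `check_encryption_key "$ENCRYPTION_KEY" || echo "regenerate ENCRYPTION_KEY"` and `grep -qE 'secret: *$' encryption-config.yaml && echo "empty secret in encryption-config.yaml"` catch the two most likely mistakes.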

# Copy the encryption-config.yaml encryption config file to each controller node

for instance in controller-0 controller-1; do

gcloud compute scp encryption-config.yaml ${instance}:~/

done

Bootstrapping the etcd Cluster

Prerequisites

The commands in this section must be run on each controller node. With tmux, we can run the same commands on all controllers at once by synchronizing multiple panes.

# Start tmux

tmux

# Split terminal vertically

ctrl + B, %

# Login to controller-0 (repeat for controller-1 on the next pane)

gcloud compute ssh controller-0

After logging in to both controllers (one per pane), synchronize the panes by pressing the prefix shortcut ctrl+B and then typing ":setw synchronize-panes".

The same command can be used to disable the synchronization of panes.

# Synchronize panes

(prefix ctrl+B) :setw synchronize-panes

Bootstrapping an etcd Cluster Member

# Download the official etcd release binaries from the etcd GitHub project

wget -q --show-progress --https-only --timestamping \

"https://github.com/etcd-io/etcd/releases/download/v3.4.15/etcd-v3.4.15-linux-amd64.tar.gz"

# Extract and install the etcd server and the etcdctl command line utility

{

tar -xvf etcd-v3.4.15-linux-amd64.tar.gz

sudo mv etcd-v3.4.15-linux-amd64/etcd* /usr/local/bin/

}

# Configure the etcd Server

{

sudo mkdir -p /etc/etcd /var/lib/etcd

sudo chmod 700 /var/lib/etcd

sudo cp ca.pem kubernetes-key.pem kubernetes.pem /etc/etcd/

}

# Retrieve the internal IP address for the current compute instance

INTERNAL_IP=$(curl -s -H "Metadata-Flavor: Google" \

http://metadata.google.internal/computeMetadata/v1/instance/network-interfaces/0/ip)

# Set the etcd name to match the hostname of the current compute instance

ETCD_NAME=$(hostname -s)

# Create the etcd.service systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd \\

--name ${ETCD_NAME} \\

--cert-file=/etc/etcd/kubernetes.pem \\

--key-file=/etc/etcd/kubernetes-key.pem \\

--peer-cert-file=/etc/etcd/kubernetes.pem \\

--peer-key-file=/etc/etcd/kubernetes-key.pem \\

--trusted-ca-file=/etc/etcd/ca.pem \\

--peer-trusted-ca-file=/etc/etcd/ca.pem \\

--peer-client-cert-auth \\

--client-cert-auth \\

--initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-peer-urls https://${INTERNAL_IP}:2380 \\

--listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\

--advertise-client-urls https://${INTERNAL_IP}:2379 \\

--initial-cluster-token etcd-cluster-0 \\

--initial-cluster controller-0=https://10.240.0.10:2380,controller-1=https://10.240.0.11:2380 \\

--initial-cluster-state new \\

--data-dir=/var/lib/etcd

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start the etcd Server

{

sudo systemctl daemon-reload

sudo systemctl enable etcd

sudo systemctl start etcd

}

Verification

# List the etcd cluster members

sudo ETCDCTL_API=3 etcdctl member list \

--endpoints=https://127.0.0.1:2379 \

--cacert=/etc/etcd/ca.pem \

--cert=/etc/etcd/kubernetes.pem \

--key=/etc/etcd/kubernetes-key.pem
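The `--initial-cluster` value in the etcd unit file must list every member as `<name>=<peer URL>`, and each name must match that member's `--name` (here the short hostname). Below is a minimal sketch that builds the value from name:IP pairs rather than typing it by hand; `build_initial_cluster` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# build_initial_cluster: hypothetical helper. Turns "name:ip" pairs into the
# comma-separated "name=https://ip:2380" list used by etcd's --initial-cluster.
build_initial_cluster() {
  local result="" pair
  for pair in "$@"; do
    # ${result:+...} only adds the separating comma after the first member.
    result="${result:+${result},}${pair%%:*}=https://${pair#*:}:2380"
  done
  printf '%s\n' "$result"
}

build_initial_cluster controller-0:10.240.0.10 controller-1:10.240.0.11
```

With the two controllers used in this lab it prints the same value as the etcd unit file: `controller-0=https://10.240.0.10:2380,controller-1=https://10.240.0.11:2380`.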

Bootstrapping the Kubernetes Control Plane

In this section of the lab we will bootstrap the Kubernetes control plane across two compute instances and configure it for high availability. We will also create an external load balancer that exposes the Kubernetes API Servers to remote clients.

Prerequisites

The commands in this part of the lab must be run on each controller node. Use tmux to synchronize the panes after logging in to each controller node.

# Log in to controller-0 (repeat for controller-1 on a separate tmux pane)

gcloud compute ssh controller-0

After logging in to both controllers (one per pane), synchronize the panes by pressing the prefix shortcut ctrl+B and then typing ":setw synchronize-panes".

The same command can be used to disable the synchronization of panes.

# Synchronize panes

(prefix ctrl+B) :setw synchronize-panes

Provision the Kubernetes Control Plane

# Create the Kubernetes configuration directory

sudo mkdir -p /etc/kubernetes/config

Download and install the Kubernetes Controller Binaries

# Download the official Kubernetes release binaries

wget -q --show-progress --https-only --timestamping \

"https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-apiserver" \

"https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-controller-manager" \

"https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-scheduler" \

"https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubectl"

# Install the Kubernetes binaries

{

chmod +x kube-apiserver kube-controller-manager kube-scheduler kubectl

sudo mv kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

}

Configuring the Kubernetes API Server

# Configure the Kubernetes directories

{

sudo mkdir -p /var/lib/kubernetes/

sudo mv ca.pem ca-key.pem kubernetes-key.pem kubernetes.pem \

service-account-key.pem service-account.pem \

encryption-config.yaml /var/lib/kubernetes/

}

# Retrieve the internal IP address for the current compute instance

INTERNAL_IP=$(curl -s -H "Metadata-Flavor: Google" \

http://metadata.google.internal/computeMetadata/v1/instance/network-interfaces/0/ip)

REGION=$(curl -s -H "Metadata-Flavor: Google" \

http://metadata.google.internal/computeMetadata/v1/project/attributes/google-compute-default-region)

KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \

--region $REGION \

--format 'value(address)')

# Create the kube-apiserver.service systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-apiserver \\

--advertise-address=${INTERNAL_IP} \\

--allow-privileged=true \\

--apiserver-count=2 \\

--audit-log-maxage=30 \\

--audit-log-maxbackup=3 \\

--audit-log-maxsize=100 \\

--audit-log-path=/var/log/audit.log \\

--authorization-mode=Node,RBAC \\

--bind-address=0.0.0.0 \\

--client-ca-file=/var/lib/kubernetes/ca.pem \\

--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \\

--etcd-cafile=/var/lib/kubernetes/ca.pem \\

--etcd-certfile=/var/lib/kubernetes/kubernetes.pem \\

--etcd-keyfile=/var/lib/kubernetes/kubernetes-key.pem \\

--etcd-servers=https://10.240.0.10:2379,https://10.240.0.11:2379 \\

--event-ttl=1h \\

--encryption-provider-config=/var/lib/kubernetes/encryption-config.yaml \\

--kubelet-certificate-authority=/var/lib/kubernetes/ca.pem \\

--kubelet-client-certificate=/var/lib/kubernetes/kubernetes.pem \\

--kubelet-client-key=/var/lib/kubernetes/kubernetes-key.pem \\

--runtime-config='api/all=true' \\

--service-account-key-file=/var/lib/kubernetes/service-account.pem \\

--service-account-signing-key-file=/var/lib/kubernetes/service-account-key.pem \\

--service-account-issuer=https://${KUBERNETES_PUBLIC_ADDRESS}:6443 \\

--service-cluster-ip-range=10.32.0.0/24 \\

--service-node-port-range=30000-32767 \\

--tls-cert-file=/var/lib/kubernetes/kubernetes.pem \\

--tls-private-key-file=/var/lib/kubernetes/kubernetes-key.pem \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Configure the Kubernetes Controller Manager

# Move the kube-controller-manager kubeconfig into place

sudo mv kube-controller-manager.kubeconfig /var/lib/kubernetes/

# Create the kube-controller-manager.service systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-controller-manager \\

--bind-address=0.0.0.0 \\

--cluster-cidr=10.200.0.0/16 \\

--cluster-name=kubernetes \\

--cluster-signing-cert-file=/var/lib/kubernetes/ca.pem \\

--cluster-signing-key-file=/var/lib/kubernetes/ca-key.pem \\

--kubeconfig=/var/lib/kubernetes/kube-controller-manager.kubeconfig \\

--leader-elect=true \\

--root-ca-file=/var/lib/kubernetes/ca.pem \\

--service-account-private-key-file=/var/lib/kubernetes/service-account-key.pem \\

--service-cluster-ip-range=10.32.0.0/24 \\

--use-service-account-credentials=true \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF
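The API server and the controller manager must agree on `--service-cluster-ip-range` (10.32.0.0/24 in both units), and a typo in one of them is easy to miss. Below is a minimal sketch for reading `--flag=value` arguments back out of a unit file; `unit_flag` is a hypothetical helper, and it does not handle flags written as `--flag value`.

```shell
#!/usr/bin/env bash
# unit_flag: hypothetical helper. Prints the value of the first
# "--<flag>=<value>" argument in a systemd unit file (value ends at a space).
unit_flag() {
  local unit="$1" flag="$2"
  sed -n "s/.*--${flag}=\([^ ]*\).*/\1/p" "$unit" | head -n 1
}
```

For example: `[ "$(unit_flag /etc/systemd/system/kube-apiserver.service service-cluster-ip-range)" = "$(unit_flag /etc/systemd/system/kube-controller-manager.service service-cluster-ip-range)" ] && echo "service ranges match"`.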

Configure the Kubernetes Scheduler

# Move the kube-scheduler kubeconfig into place

sudo mv kube-scheduler.kubeconfig /var/lib/kubernetes/

# Create the kube-scheduler.yaml configuration file

cat <<EOF | sudo tee /etc/kubernetes/config/kube-scheduler.yaml

apiVersion: kubescheduler.config.k8s.io/v1beta1
kind: KubeSchedulerConfiguration
clientConnection:
  kubeconfig: "/var/lib/kubernetes/kube-scheduler.kubeconfig"
leaderElection:
  leaderElect: true

EOF

# Create the kube-scheduler.service systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-scheduler \\

--config=/etc/kubernetes/config/kube-scheduler.yaml \\

--v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

# Start the Controller Services

{

sudo systemctl daemon-reload

sudo systemctl enable kube-apiserver kube-controller-manager kube-scheduler

sudo systemctl start kube-apiserver kube-controller-manager kube-scheduler

}

Allow up to 10 seconds for the Kubernetes API Server to fully initialize.
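Instead of waiting a fixed amount of time, you can poll until the API server answers. Below is a minimal retry helper in bash; `wait_for` is a hypothetical name, and the usage example assumes anonymous access to `/healthz` is left at its default.

```shell
#!/usr/bin/env bash
# wait_for: hypothetical helper. Runs a command up to <attempts> times, one
# second apart, and succeeds as soon as the command succeeds.
wait_for() {
  local attempts="$1" i
  shift
  for (( i = 1; i <= attempts; i++ )); do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example, on a controller: `wait_for 10 curl -sf --cacert /var/lib/kubernetes/ca.pem https://127.0.0.1:6443/healthz && echo "kube-apiserver is healthy"`.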

Enable HTTP Health Checks

A Google Network Load Balancer will be used to distribute traffic across both API servers and allow each API server to terminate TLS connections and validate client certificates. The network load balancer only supports HTTP health checks, which means that the HTTPS endpoint exposed by the API server cannot be used. As a workaround, the nginx web server can be used to proxy HTTP health checks. In this section we will install nginx and configure it to accept HTTP health checks on port 80 and proxy the connections to the API server at https://127.0.0.1:6443/healthz.

# Install a basic web server to handle HTTP health checks

sudo apt-get update

sudo apt-get install -y nginx

cat > kubernetes.default.svc.cluster.local <<EOF

server {

listen 80;

server_name kubernetes.default.svc.cluster.local;

location /healthz {

proxy_pass https://127.0.0.1:6443/healthz;

proxy_ssl_trusted_certificate /var/lib/kubernetes/ca.pem;

}

}

EOF

{

sudo mv kubernetes.default.svc.cluster.local \

/etc/nginx/sites-available/kubernetes.default.svc.cluster.local

sudo ln -s /etc/nginx/sites-available/kubernetes.default.svc.cluster.local /etc/nginx/sites-enabled/

}

sudo systemctl restart nginx

sudo systemctl enable nginx

Verification

# Check that the control plane is reachable

kubectl cluster-info --kubeconfig admin.kubeconfig

# Expected output:

# Kubernetes control plane is running at https://127.0.0.1:6443

# Test the nginx HTTP health check proxy

curl -H "Host: kubernetes.default.svc.cluster.local" -i http://127.0.0.1/healthz

The above commands need to be run on each controller node (controller-0, controller-1)

RBAC for Kubelet Authorization

In this section we will configure RBAC permissions to allow the Kubernetes API Server to access the Kubelet API on each worker node. Access to the Kubelet API is required for retrieving metrics and logs and for executing commands in pods. The commands in this section only need to be run on one controller node, as they affect the entire cluster.

# Create the system:kube-apiserver-to-kubelet ClusterRole with permissions to access the Kubelet API and perform most common tasks associated with managing pods.

cat <<EOF | kubectl apply --kubeconfig admin.kubeconfig -f -

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"

EOF

The Kubernetes API Server authenticates to the Kubelet as the kubernetes user using the client certificate as defined by the --kubelet-client-certificate flag.

# Bind the system:kube-apiserver-to-kubelet ClusterRole to the kubernetes user

cat <<EOF | kubectl apply --kubeconfig admin.kubeconfig -f -

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes

EOF

The Kubernetes Frontend Load Balancer

Run the following commands from the same machine used to create the compute instances.

Provision a Network Load Balancer

# Create the external load balancer network resources

{

KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region) \
  --format 'value(address)')

gcloud compute http-health-checks create kubernetes \
  --description "Kubernetes Health Check" \
  --host "kubernetes.default.svc.cluster.local" \
  --request-path "/healthz"

gcloud compute firewall-rules create kubernetes-the-hard-way-allow-health-check \
  --network kubernetes-the-hard-way \
  --source-ranges 209.85.152.0/22,209.85.204.0/22,35.191.0.0/16 \
  --allow tcp

gcloud compute target-pools create kubernetes-target-pool \
  --http-health-check kubernetes

gcloud compute target-pools add-instances kubernetes-target-pool \
  --instances controller-0,controller-1

gcloud compute forwarding-rules create kubernetes-forwarding-rule \
  --address ${KUBERNETES_PUBLIC_ADDRESS} \
  --ports 6443 \
  --region $(gcloud config get-value compute/region) \
  --target-pool kubernetes-target-pool

}

Verification

Run the following commands from the same machine used to create the compute instances.

# Retrieve the kubernetes-the-hard-way static IP address

KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region) \
  --format 'value(address)')

# Make an HTTP request for the Kubernetes version info

curl --cacert ca.pem https://${KUBERNETES_PUBLIC_ADDRESS}:6443/version
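To check the result without reading the JSON by eye, the `gitVersion` field can be pulled out of the `/version` response. This is a minimal sketch: the function name `git_version_from_json` is hypothetical, and it uses `sed` rather than a JSON parser, so it assumes the key and its value sit on the same line, as they do in the pretty-printed response.

```shell
# Hypothetical helper: print the "gitVersion" value from the JSON that the
# API server's /version endpoint returns (JSON is read from stdin).
# sed-based, so it assumes "gitVersion" and its value are on the same line.
git_version_from_json() {
  sed -n -E 's/.*"gitVersion":[[:space:]]*"([^"]*)".*/\1/p' | head -n 1
}

# Usage (same machine as above):
#   curl -s --cacert ca.pem "https://${KUBERNETES_PUBLIC_ADDRESS}:6443/version" \
#     | git_version_from_json
# For this lab the expected value is v1.21.0.
```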

Bootstrapping the Kubernetes Worker Nodes

In this section we will bootstrap the worker nodes. The following components will be installed on each node: runc, container networking plugins, containerd, kubelet, and kube-proxy.

# Log in to worker-0 (repeat for worker-1 in a separate tmux pane, then synchronize the panes after logging in to each worker node)

gcloud compute ssh worker-0

Provisioning a Kubernetes Worker Node

# Install the OS dependencies

{

sudo apt-get update

sudo apt-get -y install socat conntrack ipset

}

# Disable Swap

sudo swapon --show

# If the output is empty, swap is not enabled. If swap is enabled, run the following command to disable it immediately

sudo swapoff -a
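`swapoff -a` only disables swap until the next reboot. A minimal sketch for making it persistent, assuming swap is configured through `/etc/fstab` (on many cloud images it is not, in which case there is nothing to change): comment out every fstab entry whose filesystem type is `swap`. The function names `disable_swap_in_fstab` and `swap_is_active` are hypothetical, and both take a file path so they can be tried on a copy first.

```shell
# Hypothetical helper: comment out swap entries in an fstab-style file so that
# swap stays disabled after a reboot (swapoff -a alone is not persistent).
# A backup is written next to the file (e.g. /etc/fstab.bak) before editing.
# Usage: sudo bash -c "$(declare -f disable_swap_in_fstab); disable_swap_in_fstab /etc/fstab"
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Only uncommented lines whose third field (filesystem type) is exactly
  # "swap" get a leading "#"; all other lines are left untouched.
  sed -i.bak -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]].*)?)$/#\1/' "$fstab"
}

# Hypothetical helper: succeed if a /proc/swaps-style file lists an active device.
swap_is_active() {
  local swaps="${1:-/proc/swaps}"
  # /proc/swaps always starts with a header line; any further line is a device.
  [ "$(wc -l < "$swaps")" -gt 1 ]
}
```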

# Download and Install Worker Binaries

wget -q --show-progress --https-only --timestamping \
  https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.21.0/crictl-v1.21.0-linux-amd64.tar.gz \
  https://github.com/opencontainers/runc/releases/download/v1.0.0-rc93/runc.amd64 \
  https://github.com/containernetworking/plugins/releases/download/v0.9.1/cni-plugins-linux-amd64-v0.9.1.tgz \
  https://github.com/containerd/containerd/releases/download/v1.4.4/containerd-1.4.4-linux-amd64.tar.gz \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubectl \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kube-proxy \
  https://storage.googleapis.com/kubernetes-release/release/v1.21.0/bin/linux/amd64/kubelet

# Create the installation directories

sudo mkdir -p \
  /etc/cni/net.d \
  /opt/cni/bin \
  /var/lib/kubelet \
  /var/lib/kube-proxy \
  /var/lib/kubernetes \
  /var/run/kubernetes

# Install the worker binaries

{

mkdir containerd

tar -xvf crictl-v1.21.0-linux-amd64.tar.gz

tar -xvf containerd-1.4.4-linux-amd64.tar.gz -C containerd

sudo tar -xvf cni-plugins-linux-amd64-v0.9.1.tgz -C /opt/cni/bin/

sudo mv runc.amd64 runc

chmod +x crictl kubectl kube-proxy kubelet runc

sudo mv crictl kubectl kube-proxy kubelet runc /usr/local/bin/

sudo mv containerd/bin/* /bin/

}

Configure CNI Networking

# Retrieve the Pod CIDR range for the current compute instance

POD_CIDR=$(curl -s -H "Metadata-Flavor: Google" \
  http://metadata.google.internal/computeMetadata/v1/instance/attributes/pod-cidr)
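If the metadata request fails (for example, when the instance was created without a `pod-cidr` metadata attribute), `POD_CIDR` is silently empty and the bridge configuration below ends up with an empty subnet. A small guard can stop early instead. This is a sketch with a hypothetical function name, `require_cidr`; it only checks the IPv4 `a.b.c.d/n` shape and the octet and prefix ranges, and is not a full CIDR validator.

```shell
# Hypothetical guard: succeed only if $1 looks like an IPv4 CIDR (a.b.c.d/n).
require_cidr() {
  local cidr="$1" ip prefix o
  local -a octets
  if [[ ! "$cidr" =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}/[0-9]{1,2}$ ]]; then
    echo "invalid or empty CIDR: '${cidr}'" >&2
    return 1
  fi
  ip="${cidr%/*}"
  prefix="${cidr#*/}"
  # Each octet must be 0-255 and the prefix length 0-32 (10# avoids octal parsing).
  IFS=. read -r -a octets <<< "$ip"
  for o in "${octets[@]}"; do
    (( 10#$o <= 255 )) || { echo "octet out of range in '${cidr}'" >&2; return 1; }
  done
  (( 10#$prefix <= 32 )) || { echo "prefix out of range in '${cidr}'" >&2; return 1; }
}

# Usage on a worker, right after retrieving POD_CIDR:
#   require_cidr "${POD_CIDR}" || exit 1
```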

# Create the bridge network configuration file

cat <<EOF | sudo tee /etc/cni/net.d/10-bridge.conf

{

"cniVersion": "0.4.0",

"name": "bridge",

"type": "bridge",

"bridge": "cnio0",

"isGateway": true,

"ipMasq": true,

"ipam": {

"type": "host-local",

"ranges": [

[{"subnet": "${POD_CIDR}"}]

],

"routes": [{"dst": "0.0.0.0/0"}]

}

}

EOF

# Create the loopback network configuration file

cat <<EOF | sudo tee /etc/cni/net.d/99-loopback.conf

{

"cniVersion": "0.4.0",

"name": "lo",

"type": "loopback"

}

EOF

Configure containerd

# Create the containerd configuration file

sudo mkdir -p /etc/containerd/

cat << EOF | sudo tee /etc/containerd/config.toml

[plugins]

[plugins.cri.containerd]

snapshotter = "overlayfs"

[plugins.cri.containerd.default_runtime]

runtime_type = "io.containerd.runtime.v1.linux"

runtime_engine = "/usr/local/bin/runc"

runtime_root = ""

EOF

# Create the containerd.service systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/containerd.service

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target

[Service]

ExecStartPre=/sbin/modprobe overlay

ExecStart=/bin/containerd

Restart=always

RestartSec=5

Delegate=yes

KillMode=process

OOMScoreAdjust=-999

LimitNOFILE=1048576

LimitNPROC=infinity

LimitCORE=infinity

[Install]

WantedBy=multi-user.target

EOF

Configure the Kubelet

{

sudo mv ${HOSTNAME}-key.pem ${HOSTNAME}.pem /var/lib/kubelet/

sudo mv ${HOSTNAME}.kubeconfig /var/lib/kubelet/kubeconfig

sudo mv ca.pem /var/lib/kubernetes/

}

# Create the kubelet-config.yaml configuration file

cat <<EOF | sudo tee /var/lib/kubelet/kubelet-config.yaml
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    enabled: true
  x509:
    clientCAFile: "/var/lib/kubernetes/ca.pem"
authorization:
  mode: Webhook
clusterDomain: "cluster.local"
clusterDNS:
  - "10.32.0.10"
podCIDR: "${POD_CIDR}"
resolvConf: "/run/systemd/resolve/resolv.conf"
runtimeRequestTimeout: "15m"
tlsCertFile: "/var/lib/kubelet/${HOSTNAME}.pem"
tlsPrivateKeyFile: "/var/lib/kubelet/${HOSTNAME}-key.pem"
EOF

# Create the kubelet.service systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=containerd.service

Requires=containerd.service

[Service]

ExecStart=/usr/local/bin/kubelet \\
  --config=/var/lib/kubelet/kubelet-config.yaml \\
  --container-runtime=remote \\
  --container-runtime-endpoint=unix:///var/run/containerd/containerd.sock \\
  --image-pull-progress-deadline=2m \\
  --kubeconfig=/var/lib/kubelet/kubeconfig \\
  --network-plugin=cni \\
  --register-node=true \\
  --v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Configure the Kubernetes Proxy

sudo mv kube-proxy.kubeconfig /var/lib/kube-proxy/kubeconfig

# Create the kube-proxy-config.yaml configuration file

cat <<EOF | sudo tee /var/lib/kube-proxy/kube-proxy-config.yaml

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

clientConnection:
  kubeconfig: "/var/lib/kube-proxy/kubeconfig"

mode: "iptables"

clusterCIDR: "10.200.0.0/16"

EOF

# Create the kube-proxy.service systemd unit file

cat <<EOF | sudo tee /etc/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube Proxy

Documentation=https://github.com/kubernetes/kubernetes

[Service]

ExecStart=/usr/local/bin/kube-proxy \\
  --config=/var/lib/kube-proxy/kube-proxy-config.yaml

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

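# Start the worker services

{

sudo systemctl daemon-reload

sudo systemctl enable containerd kubelet kube-proxy

sudo systemctl start containerd kubelet kube-proxy

}

The above commands must be run on each worker node.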

Verification

Run the following commands from the same machine used to create the compute instances.

# List the registered Kubernetes nodes

gcloud compute ssh controller-0 \
  --command "kubectl get nodes --kubeconfig admin.kubeconfig"
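Each worker should be listed with a STATUS of `Ready`; a `NotReady` node often points to a problem with containerd, the CNI configuration or the kubelet on that worker. A minimal sketch of an automated check, using a hypothetical `all_nodes_ready` function that parses the plain-text table printed by `kubectl get nodes` and assumes STATUS is the second column, as in the default output:

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and succeed only
# if at least one node is listed and every node's STATUS column is exactly "Ready".
all_nodes_ready() {
  awk 'NR == 1 { next }                      # skip the header row
       { total++; if ($2 != "Ready") bad++ }
       END { exit (total > 0 && bad == 0) ? 0 : 1 }'
}

# Usage (same machine as above):
#   gcloud compute ssh controller-0 \
#     --command "kubectl get nodes --kubeconfig admin.kubeconfig" | all_nodes_ready \
#     && echo "all nodes Ready"
```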

Configuring kubectl for Remote Access

In this section of the lab we will generate a kubeconfig file for the kubectl command line utility based on the admin user credentials.

Each kubeconfig requires a Kubernetes API Server to connect to. To support high availability the IP address assigned to the external load balancer fronting the Kubernetes API Servers will be used.

# Generate a kubeconfig file suitable for authenticating as the admin user

{

KUBERNETES_PUBLIC_ADDRESS=$(gcloud compute addresses describe kubernetes-the-hard-way \
  --region $(gcloud config get-value compute/region) \
  --format 'value(address)')

kubectl config set-cluster kubernetes-the-hard-way \
  --certificate-authority=ca.pem \
  --embed-certs=true \
  --server=https://${KUBERNETES_PUBLIC_ADDRESS}:6443

kubectl config set-credentials admin \
  --client-certificate=admin.pem \
  --client-key=admin-key.pem

kubectl config set-context kubernetes-the-hard-way \
  --cluster=kubernetes-the-hard-way \
  --user=admin

kubectl config use-context kubernetes-the-hard-way

}

Verification

# Check the version of the remote Kubernetes cluster

kubectl version

# List the nodes in the remote Kubernetes cluster

kubectl get nodes

Provisioning Pod Network Routes

In this section of the lab we will create a route for each worker node that maps the node's Pod CIDR range to the node's internal IP address.

The Routing Table

First we will need to gather information required to create routes in the kubernetes-the-hard-way VPC network.

# Print the internal IP address and Pod CIDR range for each worker instance

for instance in worker-0 worker-1; do
  gcloud compute instances describe ${instance} \
    --format 'value[separator=" "](networkInterfaces[0].networkIP,metadata.items[0].value)'
done
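The route names used below encode the Pod CIDR (10.200.0.0/24 becomes kubernetes-route-10-200-0-0-24), and the next hop is the worker's internal IP. As a sketch, the output of the loop above can be turned into the matching `gcloud compute routes create` commands for review before anything is created. The function `print_route_commands` is hypothetical and only prints commands; it assumes each input line is "INTERNAL_IP POD_CIDR".

```shell
# Hypothetical helper: turn "INTERNAL_IP POD_CIDR" lines into the matching
# `gcloud compute routes create` commands, printing them without running them.
print_route_commands() {
  local ip cidr name
  while read -r ip cidr; do
    [ -n "$ip" ] && [ -n "$cidr" ] || continue   # skip blank or incomplete lines
    # Dots and the slash in the CIDR become dashes in the route name.
    name="kubernetes-route-$(printf '%s' "$cidr" | tr './' '--')"
    printf 'gcloud compute routes create %s --network kubernetes-the-hard-way --next-hop-address %s --destination-range %s\n' \
      "$name" "$ip" "$cidr"
  done
}

# Usage: pipe the describe loop into the helper, review the output, and only
# then run it (for example by piping the reviewed commands into bash).
```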

Routes

# Create network routes for each worker instance

for i in 0 1; do
  gcloud compute routes create kubernetes-route-10-200-${i}-0-24 \
    --network kubernetes-the-hard-way \
    --next-hop-address 10.240.0.2${i} \
    --destination-range 10.200.${i}.0/24
done

# List the routes in the kubernetes-the-hard-way VPC network

gcloud compute routes list --filter "network: kubernetes-the-hard-way"

Deploying the DNS Cluster Add-on

In this lab we will deploy the DNS add-on which provides DNS based service discovery, backed by CoreDNS, to applications running inside the Kubernetes cluster.

The DNS Cluster Add-on

# Deploy the coredns cluster add-on (step as presented in the original guide of kubernetes-the-hard-way)

kubectl apply -f https://storage.googleapis.com/kubernetes-the-hard-way/coredns-1.8.yaml

At the time of writing this documentation, the above command failed with an error message stating that the file does not exist.

As a workaround, we can use a trick posted by hkz-aarvesen in this article.

The trick is to take the manifest for CoreDNS 1.7.0 from an older version of the lab and save it under a CoreDNS 1.8.0 file name so that it works with the rest of this lab's configuration. In the following steps we will use the same method, replacing the link with one that points to the CoreDNS 1.7.0 YAML file and updating its image to 1.8.0.

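# Get the CoreDNS 1.7.0 manifest from the 1.18.6 version of the kubernetes-the-hard-way lab (use the raw.githubusercontent.com URL, since the github.com "blob" URL returns an HTML page rather than the YAML file)

wget https://raw.githubusercontent.com/kelseyhightower/kubernetes-the-hard-way/1.18.6/deployments/coredns-1.7.0.yaml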

# Copy it to a file named for CoreDNS 1.8.0

cp coredns-1.7.0.yaml coredns-1.8.0.yaml

# Inside the CoreDNS YAML file, scroll down to the "image:" field and replace its value with "coredns/coredns:1.8.0"

nano coredns-1.8.0.yaml

# apply it

kubectl apply -f coredns-1.8.0.yaml

# List the pods created by the kube-dns deployment

kubectl get pods -l k8s-app=kube-dns -n kube-system
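As a non-interactive alternative to editing the manifest in nano, the image tag can be rewritten with `sed`. This sketch assumes the image line has the form `image: coredns/coredns:<tag>` (optionally as a `- image:` list item); the function name `set_coredns_image` is hypothetical. It keeps a `.bak` copy, and the result should be checked with `grep` before running `kubectl apply`.

```shell
# Hypothetical helper: rewrite every "image: coredns/coredns:<tag>" line in a
# manifest to the given tag, keeping the original indentation.
set_coredns_image() {
  local manifest="$1" tag="$2"
  sed -i.bak -E "s#^([[:space:]]*(-[[:space:]]+)?image:[[:space:]]*)coredns/coredns:[^[:space:]]+#\1coredns/coredns:${tag}#" "$manifest"
}

# Usage (instead of the nano step):
#   set_coredns_image coredns-1.8.0.yaml 1.8.0
#   grep -n 'image:' coredns-1.8.0.yaml
```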

Verification

# Create a busybox pod

kubectl run busybox --image=busybox:1.28 --command -- sleep 3600

# List the busybox pod

kubectl get pods -l run=busybox

# Retrieve the full name of the busybox pod

POD_NAME=$(kubectl get pods -l run=busybox -o jsonpath="{.items[0].metadata.name}")

# Execute a DNS lookup for the kubernetes service inside the busybox pod

kubectl exec -ti $POD_NAME -- nslookup kubernetes
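A successful lookup should go through the cluster DNS service at 10.32.0.10 (the `clusterDNS` address from the kubelet configuration) and resolve `kubernetes` to `kubernetes.default.svc.cluster.local`. A minimal sketch of a check on the nslookup output, assuming the busybox 1.28 output format with a leading `Server:` line; `dns_lookup_ok` is a hypothetical name.

```shell
# Hypothetical check: read nslookup output on stdin and succeed if the query was
# answered by 10.32.0.10 and the kubernetes service resolved to its cluster name.
dns_lookup_ok() {
  local out
  out="$(cat)"
  grep -q '^Server:[[:space:]]*10\.32\.0\.10' <<< "$out" &&
    grep -q 'kubernetes\.default\.svc\.cluster\.local' <<< "$out"
}

# Usage (drop -ti when piping, so no terminal is allocated):
#   kubectl exec $POD_NAME -- nslookup kubernetes | dns_lookup_ok && echo "DNS OK"
```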

Smoke Test

In this section of the lab we will complete a series of tasks to ensure the Kubernetes cluster is functioning correctly.

Data Encryption

In this section we will verify the ability to encrypt secret data at rest.

# Create a generic secret

kubectl create secret generic kubernetes-the-hard-way \
  --from-literal="mykey=mydata"

# Print a hexdump of the kubernetes-the-hard-way secret stored in etcd

gcloud compute ssh controller-0 \
  --command "sudo ETCDCTL_API=3 etcdctl get \
  --endpoints=https://127.0.0.1:2379 \
  --cacert=/etc/etcd/ca.pem \
  --cert=/etc/etcd/kubernetes.pem \
  --key=/etc/etcd/kubernetes-key.pem \
  /registry/secrets/default/kubernetes-the-hard-way | hexdump -C"
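Assuming the encryption config generated earlier in the lab (the `aescbc` provider with a key named `key1`), the stored value should begin with `k8s:enc:aescbc:v1:key1` and the plaintext `mydata` should not appear in clear. Rather than scanning the hexdump by eye, the raw `etcdctl` output (the same command without `| hexdump -C`) can be checked with a small function. `is_encrypted_at_rest` is a hypothetical name, and this is a quick sanity check, not a forensic test.

```shell
# Hypothetical check: read raw `etcdctl get` output for the secret on stdin and
# succeed only if the value carries the aescbc/key1 prefix and the plaintext
# passed as $1 ("mydata" in this lab) does not appear anywhere in the bytes.
is_encrypted_at_rest() {
  local plaintext="$1" tmp rc=1
  tmp="$(mktemp)"
  cat > "$tmp"   # keep the binary data in a file (bash variables drop NUL bytes)
  # -a treats binary data as text; LC_ALL=C avoids multibyte decoding issues.
  if LC_ALL=C grep -aq 'k8s:enc:aescbc:v1:key1' "$tmp" &&
     ! LC_ALL=C grep -aqF "$plaintext" "$tmp"; then
    rc=0
  fi
  rm -f "$tmp"
  return "$rc"
}
```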

Deployments

In this section of the lab we will verify the ability to create and manage Deployments.

# Create a deployment for the nginx web server

kubectl create deployment nginx --image=nginx

kubectl get pods -l app=nginx

Port Forwarding

In this section we will verify the ability to access applications remotely using port forwarding.

# Retrieve the full name of the nginx pod

POD_NAME=$(kubectl get pods -l app=nginx -o jsonpath="{.items[0].metadata.name}")

# Forward port 8080 on your local machine to port 80 of the nginx pod

kubectl port-forward $POD_NAME 8080:80

# In a new terminal make an HTTP request using the forwarding address

curl --head http://127.0.0.1:8080

# Switch back to the previous terminal and stop the port forwarding to the nginx pod

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

Handling connection for 8080

^C

Logs

In this section we will verify the ability to retrieve container logs.

# Print the nginx pod logs

kubectl logs $POD_NAME

Exec

In this section of the lab we will verify the ability to execute commands in a container.

kubectl exec -ti $POD_NAME -- nginx -v

Services

In this section we will verify the ability to expose applications using a Service.

# Expose the nginx deployment using a NodePort service

kubectl expose deployment nginx --port 80 --type NodePort

# Retrieve the node port assigned to the nginx service

NODE_PORT=$(kubectl get svc nginx \
  --output=jsonpath='{range .spec.ports[0]}{.nodePort}')
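If `NODE_PORT` came back empty or unexpected, the firewall rule and the curl request below would not target the service. By default the API server allocates NodePorts from 30000-32767, so a quick sanity check is possible. This is a sketch with a hypothetical function name, `is_default_nodeport`; a different range applies if the API server was started with a custom `--service-node-port-range`.

```shell
# Hypothetical check: succeed if $1 is a port inside the default NodePort
# range 30000-32767 (10# forces base-10 so leading zeros are not octal).
is_default_nodeport() {
  local port="$1"
  [[ "$port" =~ ^[0-9]+$ ]] && (( 10#$port >= 30000 && 10#$port <= 32767 ))
}

# Usage, before creating the firewall rule:
#   is_default_nodeport "${NODE_PORT}" || echo "unexpected NODE_PORT: '${NODE_PORT}'"
```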

# Create a firewall rule that allows remote access to the nginx node port

gcloud compute firewall-rules create kubernetes-the-hard-way-allow-nginx-service \
  --allow=tcp:${NODE_PORT} \
  --network kubernetes-the-hard-way

# Retrieve the external IP address of a worker instance

EXTERNAL_IP=$(gcloud compute instances describe worker-0 \
  --format 'value(networkInterfaces[0].accessConfigs[0].natIP)')

# Make an HTTP request using the external IP address and the nginx node port

curl -I http://${EXTERNAL_IP}:${NODE_PORT}
